The National Transportation Safety Board urged government regulators and manufacturers to implement safeguards for automated vehicle technology and had strong words for both Tesla and NHTSA, pressing each to act.

The board held a public hearing Tuesday to determine the probable cause of the March 23, 2018, fatal crash of a Tesla Model X in Mountain View, Calif.

The crash on U.S. 101 killed the driver, Walter Huang, who was using Tesla Inc.’s advanced driver-assistance system, known as Autopilot. According to performance data downloaded from the crash vehicle, Huang was using traffic-aware cruise control and autosteer lane-keeping assist, both ADAS features that are part of Tesla’s system.

Huang, a 38-year-old Apple Inc. software engineer, had Autopilot engaged continuously for the last 18 minutes and 55 seconds before his car struck a highway barrier at approximately 71 mph. The vehicle provided two visual alerts and one auditory alert for the driver to place his hands on the steering wheel, according to the preliminary report. The driver’s hands were not detected on the steering wheel in the six seconds before the crash, the NTSB said.

Records reviewed by the board also found Huang was playing a video game on his iPhone before the crash, though it could not determine whether he was actively engaged with the game or just holding the device.

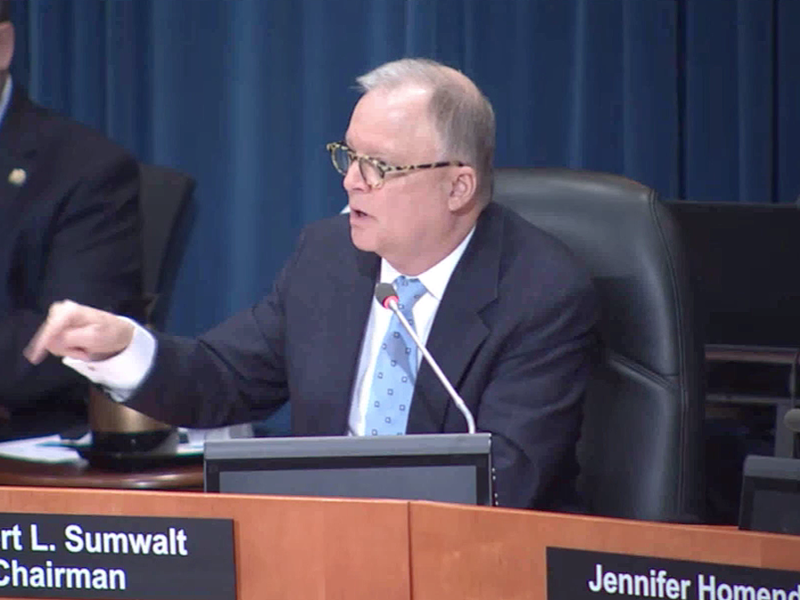

But a vehicle with partial automation is not a fully automated vehicle, NTSB Chairman Robert Sumwalt said in the hearing.

“This means that when driving in the supposed self-driving mode, you can’t sleep. You can’t read a book. You can’t watch a movie or TV show. You can’t text,” he added. “And you can’t play video games.”

Sumwalt said it was the “lack of system safeguards” that struck him the most in the investigative findings.

“The industry in some cases is ignoring the NTSB’s recommendations intending to help prevent such tragedies,” Sumwalt said. “Equally disturbing is that government regulators have provided scant oversight, ignoring — in some cases — this board’s recommendations for system safeguards.”

In the meeting, NTSB investigators identified seven key safety issues involving driver distraction, limitations of collision-avoidance systems and insufficient federal oversight of partial automation systems, among other areas. NTSB investigators also called on phone manufacturers such as Apple to develop “distracted-driving lockout mechanisms” that would be installed as a default setting on all phones.

The board also made nine safety recommendations, including an expansion of NHTSA’s New Car Assessment Program testing of forward collision avoidance system performance, further evaluation of Autopilot-equipped vehicles, “collaborative development” of standards for driver monitoring systems to minimize driver disengagement, and several proposals covering cellphone use while driving and enforcement strategies for reducing distracted driving.

Automotive News has reached out to NHTSA for comment.

NHTSA’s Special Crash Investigations unit has opened 14 separate probes of Autopilot, including ongoing examinations of two separate fatal crashes that occurred Dec. 29, 2019. But NHTSA is waiting for problems to occur rather than addressing safety proactively, board members said during the hearing.

NTSB investigator Don Karol said both the Department of Transportation and NHTSA have taken a “hands-off approach to automated-vehicle safety.”

“While DOT has issued some policy guidance for higher levels of automation — Level 3 to Level 5 — [there is] little to no guidance where standards exist for partial driving-automation systems currently on our highways today,” he said.

The Center for Auto Safety, a Washington-based nonprofit consumer advocacy group, pointed to leadership at NHTSA as “the real villain in this story,” calling out the agency for “failing to recall or regulate” systems such as Autopilot.

“[Transportation] Secretary [Elaine] Chao has repeatedly claimed that NHTSA will not hesitate to act in the interest of public safety. At this point, those words have proven to be all talk and no action,” Jason Levine, the group’s executive director, said in an emailed statement before the Tuesday hearing.

Consumer Reports, another advocate for consumers, stressed the need for “key safety features in any vehicle” with ADAS.

“This shouldn’t be considered optional,” Ethan Douglas, senior policy analyst for cars and product safety at Consumer Reports, said in a statement. “Manufacturers and NHTSA must make sure that these driver-assist systems come with critical safety features that actually verify drivers are monitoring the road and ready to take action at all times. Otherwise, the safety risks of these systems could end up outweighing their benefits.”

The Mountain View crash is the fourth investigation the safety board has taken up in recent years involving Tesla’s Autopilot.

In 2017, following the probe of a fatal May 7, 2016, crash of a Tesla Model S near Williston, Fla., the NTSB issued several new safety guidelines and sent recommendations to NHTSA, the Department of Transportation and what were then the Alliance of Automobile Manufacturers and Global Automakers.

The board also sent recommendations to six automakers: Tesla, Volkswagen, BMW, Nissan, Mercedes-Benz and Volvo.

“And of the six, five of those manufacturers have responded favorably, stating that they are working to implement that recommendation,” Sumwalt said. “But sadly, one manufacturer has ignored us. And that manufacturer is Tesla.”

Sumwalt said it has been 881 days since the recommendations were sent to Tesla. They include the need for system safeguards that limit the use of automated vehicle-control systems “to those conditions for which they were designed” and for applications that more effectively sense the driver’s level of engagement.

“We’re still waiting,” he added.