For highly automated cars, seeing what’s ahead of and around the vehicle is critical. But seeing unoccupied space can provide a more complete picture — by literally filling in the blanks.

Arbe Robotics Ltd., a leader in 4D imaging radar systems for advanced driver-assist systems and autonomous driving, plans to highlight its free-space mapping, a critical refinement to its perception programming and sensors, this week at CES.

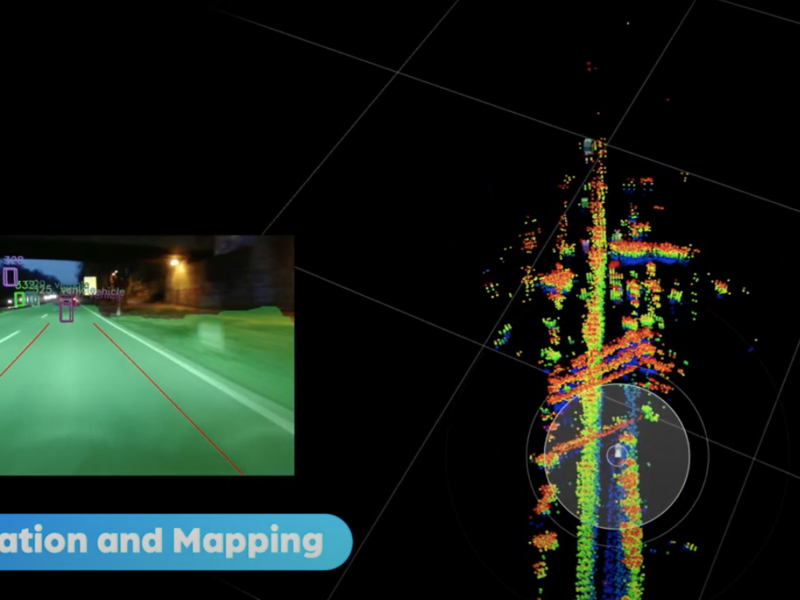

The new algorithm identifies unobstructed, drivable areas around the vehicle and locates the vehicle within that mapped space. It does this in concert with on-board camera inputs, an approach known as “sensor fusion.”

“A key element in the perception of the car is being able to understand which areas are free and which are occupied by another car or a guardrail,” explains Ram Machness, chief business officer at Tel Aviv-based Arbe. “Nobody till now was able to use radar for free-space mapping. The current generation of [competitor] radars cannot provide free-space mapping. And today’s radar ignores stationary objects and the size and height of objects. They lack resolution.”

In contrast, Arbe claims its 4D radar sensors measure distances, elevations and the direction of moving and stationary objects at longer ranges (up to 500 meters) and with a wide field of view in all weather and lighting conditions. Previously, most systems relied solely on camera algorithms for free-space mapping, which is accurate only out to 160 to 200 meters under ideal conditions.

Distances sensed with cameras alone can be considerably shorter in poor light and bad weather. And while camera-only solutions work reasonably well with Level 2 autonomous systems (driver attention always required), most experts believe that sophisticated Level 4 and 5 autonomous operation demands the more robust all-weather and all-lighting integrity of radar-supported free-space mapping. (In Level 3 systems, the driver might be required to take control under “challenging” conditions. In Level 4 and 5 systems, the vehicle cannot assume a driver is available to intervene.)

Machness says Arbe’s fusion of camera and ultra-high-resolution radar crisply separates stationary targets from moving ones and helps eliminate false positives that lead to unnecessary heavy braking. He acknowledges that sophisticated lidar (light detection and ranging) is also a viable high-resolution perception technology that can be used alongside cameras as a redundant source for free-space detection. But he questions using lidar given its stubbornly high cost, its greater processing requirements and its limited range (100 meters or less) in poor weather.

“But for me, the worst point regarding lidar is that the cost is simply too high to use on a mass-market car, at least for the next three to five years,” Machness says. “It’s not an option. And it suffers from some of the same disadvantages as a camera in direct sunlight, fog and rain. The revolution here is that you can now use radar and camera fusion for free-space mapping.”

CES has also selected Arbe as a 2022 Innovation honoree in the Vehicle Intelligence & Transportation product category for its affordable, ultra-high-resolution “Phoenix” Perception Imaging Radar with free-space mapping. In September 2021, Arbe earned an Automotive News PACEpilot award.